I learned the new sorting algorithms quick sort, merge sort and Tim sort. In CSC108 I was introduced to the concept of sorting algorithms and learned insertion sort, selection sort and bubble sort. I am still not very confident with Tim sort and quick sort, but I do know that they are generally faster than the other sorting algorithms on large lists, and I know Tim sort is a hybrid (of merge sort and insertion sort). Maybe if I continue "slogging" I'll update this with a more detailed understanding.

Merge Sort

Merge sort was fairly hard to grasp, but I watched this really helpful YouTube video (https://www.youtube.com/watch?v=EeQ8pwjQxTM). It's easier to understand the sorting types when you actually see someone move physical objects around. Merge sort has n log n runtime in its worst case, and that is totally understandable: you make single-element lists, then merge them into lists of 2, then 4, then 8 and so on, which takes about log n rounds of merging with up to about n comparisons per round. It doesn't matter whether your list is sorted or not, because merge sort still goes through all the steps in both the best and the worst case.

Merge sort basically takes all the elements in a list and splits them into single-element lists. You then merge the first 2 lists, placing their elements in order from lowest to highest, then do the same with the next 2, until you have a bunch of sorted lists that are each 2 elements long (plus one leftover single-element list if the original length is odd). You now merge (this is why it's called merge sort, I assume) the first list with the second by repeatedly comparing the smallest remaining element of the first list to the smallest remaining element of the second and taking the smaller one, until you're left with one merged, sorted list from the first 2 lists. You do the same to the third with the fourth, and keep following these steps until you have one sorted list left.
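To check my understanding, here is a minimal Python sketch of the steps above. It is my own bottom-up version, not the code from the video or from lecture, and the names `merge`, `merge_sort` and `runs` are just ones I made up for illustration.

```python
def merge(left, right):
    """Merge two already-sorted lists into one new sorted list."""
    merged = []
    i = j = 0
    # Compare the smallest remaining element of each list and take the smaller.
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One list is used up; whatever is left in the other is already sorted.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def merge_sort(items):
    """Return a new sorted list, built by merging neighbouring lists in rounds."""
    # Start with one single-element list per item.
    runs = [[x] for x in items]
    while len(runs) > 1:
        next_runs = []
        for k in range(0, len(runs), 2):
            if k + 1 < len(runs):
                next_runs.append(merge(runs[k], runs[k + 1]))
            else:
                # Odd one out: carry it over to the next round unchanged.
                next_runs.append(runs[k])
        runs = next_runs
    return runs[0] if runs else []


print(merge_sort([5, 2, 4, 1, 3]))
```

Each pass through the `while` loop is one round of merging, and the number of lists roughly halves every round, which is where the log n part of the runtime comes from.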

Insertion Sort

Insertion sort can be better than merge sort on a list that is already sorted, because its runtime depends on whether your list is sorted or not. In the worst case insertion sort has a runtime of n^2, because it has to keep looking through the sorted part of the list for the position of the next element. In the worst case, which is a list sorted in reverse order, every element in the sorted part gets shifted at every single step. In its best case it has a runtime of n, because the list is already sorted and no shifting is needed: each element is just added to the end of the sorted part.

You start at index 0 in the list and consider that index sorted. You then look at index 1 (that is, index 0 of the unsorted part of the list) and insert it either before or after index 0, depending on whether it is less than or greater than that number. After that you just keep moving down the list, inserting the next element where it fits in the sorted part.
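A small Python sketch of the insertion steps described above, assuming the list is sorted in place. The shift counter is something I added only to show the best and worst cases; `insertion_sort` and `shifts` are my own names.

```python
def insertion_sort(items):
    """Sort items in place and return how many elements were shifted."""
    shifts = 0
    # Index 0 counts as the sorted part; insert every later element into it.
    for i in range(1, len(items)):
        value = items[i]
        j = i - 1
        # Shift larger sorted elements one place right to make room.
        while j >= 0 and items[j] > value:
            items[j + 1] = items[j]
            j -= 1
            shifts += 1
        items[j + 1] = value
    return shifts


already_sorted = [1, 2, 3, 4]
backwards = [4, 3, 2, 1]
print(insertion_sort(already_sorted))  # 0 shifts: the best case
print(insertion_sort(backwards))       # 6 shifts: the worst case for 4 elements
```

For a reversed list of length n the count is n(n-1)/2, which grows like n^2, while the sorted list only costs one comparison per element.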

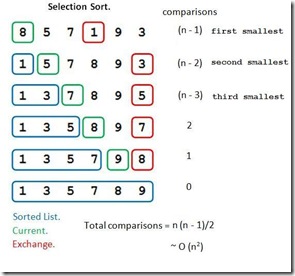

Selection Sort

Selection sort is simpler than merge sort but slower on large lists, and in its best case it is worse off than insertion sort. The worst case runtime and the best case runtime are both n^2. This is because, regardless of whether the list is sorted or not, you go through the entire unsorted portion the same number of times to select the largest element and place it at the end of the list. You then look through the remaining unsorted portion, get its largest element, and place it right before the one you placed last. This repeats until the unsorted portion is down to a single element.
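Here is a rough Python sketch of that process, assuming an in-place sort that swaps the largest unsorted element to the end of the unsorted portion. The comparison counter is my own addition to show that the work is the same either way; `selection_sort` and `comparisons` are made-up names.

```python
def selection_sort(items):
    """Sort items in place and return how many comparisons were made."""
    comparisons = 0
    # 'end' is the last index of the unsorted portion; it shrinks each pass.
    for end in range(len(items) - 1, 0, -1):
        largest = 0
        # Scan the whole unsorted portion for its largest element.
        for k in range(1, end + 1):
            comparisons += 1
            if items[k] > items[largest]:
                largest = k
        # Put the largest element right before the already-sorted tail.
        items[largest], items[end] = items[end], items[largest]
    return comparisons


print(selection_sort([1, 2, 3, 4]))  # 6 comparisons on a sorted list
print(selection_sort([4, 3, 2, 1]))  # 6 comparisons on a reversed list
```

The count is always n(n-1)/2 for a list of length n, so the best case and the worst case both grow like n^2.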

Bubble Sort